Wow, it’s been a very long time since I last published a post. I prepared quite a few in the meantime but never polished them up for publication. However, since this issue appears to be recurring right now (March 2016), I’ve decided to finally put this one online. Please don’t take any time-related information in this post as current: I last updated it two years ago and am publishing it largely unrevised.

I originally wrote this post back in March 2014 with full names in it. I hoped that the service I had problems with would get fixed, but as of May 2014 it hadn’t been. I still won’t name it directly to avoid any legal trouble, but it should be enough to check your own config for the points below if you encounter problems with any servers on the Internet. In the meantime, I’ve seen more than just this one service with the same issues.

There’s a web service that caught my interest lately (ehm… back in 2014 ;) ), so I wanted to access it from my home computer, which has an IPv6 connection via SixXS/NetCologne. To my surprise, I was unable to establish a connection via IPv6, only via IPv4. I couldn’t find the cause at the time, so I just forced access via IPv4 to work around the issue. A few weeks later, after having deployed IPv6 at work (also SixXS via NetCologne), I noticed the same effect there as well. Strangely, a friend had no problems accessing it via either IPv6 or IPv4, so I figured there might be a routing issue, or the company (or their CDN) might be blocking connections that do not match geo-lookups done via DNS. Since I had the (slightly unprofessional) impression that “the Internet was broken” around early March (at least if you were using Deutsche Telekom as ISP), I put the issue aside and revisited it only later.

The service was still inaccessible via IPv6 from my networks but the friend, having native IPv6 from Deutsche Telekom, could access it without problems. We compared DNS but Telekom DNS, Google DNS and NetCologne DNS always resolved to the same addresses, so there shouldn’t be any issue with geo-lookups. Finally, I found a thread where other people experienced the same issue and suspected the MTU size and missing ICMPv6 to be a problem. Oookay…?

So apparently, the operator deployed IPv6 to their servers and missed that ICMPv6 is mandatory for IPv6 to work properly. The issue appears not to have reached their network operations department yet, so nobody has fixed it on their end so far. And indeed: setting the MTU to 1280 locally made the service instantly reachable. Let’s investigate what happened here, as it’s mainly (but not solely) the operator’s fault:

Fixing the MTU on your local network

On your local end, you are using a higher MTU than 1280 (the minimum MTU every IPv6 link has to support). That is a bit unfortunate if the first hops of your upstream provider already use a lower MTU than your local default (usually 1500 on Ethernet).

What happens at this point is Path MTU Discovery, since IPv6 routers no longer fragment packets on their own (as opposed to IPv4): if your client sends a packet that does not fit through a router’s outbound interface for the route to be taken, the router discards the packet and replies with an ICMPv6 “Packet Too Big” message which includes the MTU of that outbound interface. Your client saves that Path MTU (“pmtu”) in its route cache (Linux: ip -6 route show table cache) and resends the data in packets small enough for that MTU. This repeats until the whole route is traversable and your packets reach their actual destination.

If your upstream provider uses an MTU of 1280 (the changeable default for SixXS tunnels) and your clients try an MTU of e.g. 1500, “Packet Too Big” is sent by your local router or, at the latest, by your provider’s gateway for almost every connection you try to establish (since 1280 bytes are easily exceeded). Let’s see what that discovery looks like for an unrelated website with tracepath6 after forcing the MTU to 1500:

# sysctl net.ipv6.conf.br0.mtu=1500; ip -6 route flush cache; tracepath6 www.heise.de

net.ipv6.conf.br0.mtu = 1500

1?: [LOCALHOST] 0.038ms pmtu 1500

1: xxxx:xxxx:xxxx:xxxx:: 5.936ms

1: xxxx:xxxx:xxxx:xxxx:: 1.215ms

2: xxxx:xxxx:xxxx:xxxx:: 1.229ms pmtu 1280

2: gw-XXXX.cgn-01.de.sixxs.net 29.395ms

3: 2001:4dd0:1234:3::42 29.967ms asymm 2

4: core-eup2-ge1-22.netcologne.de 29.940ms asymm 3

5: core-eup1-vl501.netcologne.de 29.940ms asymm 4

6: rtamsix-te4-2.netcologne.de 33.667ms asymm 5

7: ams-ix-v6.nl.plusline.net 38.434ms asymm 8

8: te2-4.c101.f.de.plusline.net 139.257ms asymm 7

9: 2a02:2e0:3fe:ff21:c::2 37.920ms asymm 8

10: 2a02:2e0:3fe:ff21:c::2 37.935ms !A

Resume: pmtu 1280

We can see that PMTU starts with 1500 (the device’s default) but my local router (IP masked with xxxx) replied with “Packet Too Big”, indicating that the MTU for that path should be 1280, hence a PMTU of 1280 is being used to continue.
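To make the discovery loop a bit more tangible, here is a minimal Python sketch of it. It is only a toy model, not what the kernel actually does: the function name discover_path_mtu and the list of link MTUs are made up for illustration, and each list entry stands for the outbound MTU of one router on the path.

```python
def discover_path_mtu(local_mtu, link_mtus):
    """Toy model of Path MTU Discovery.

    link_mtus holds the outbound MTU of each router on the path, in hop
    order (hypothetical values). Returns (final_pmtu, events), where
    events lists every (hop, mtu) that answered with "Packet Too Big".
    """
    pmtu = local_mtu
    events = []
    while True:
        for hop, link_mtu in enumerate(link_mtus, start=1):
            if pmtu > link_mtu:
                # The router drops the packet and reports its outbound MTU.
                events.append((hop, link_mtu))
                pmtu = link_mtu
                break  # the sender starts over with smaller packets
        else:
            # No router complained, so the packet reached the destination.
            return pmtu, events


# Local MTU 1500, first router has a 1280-byte tunnel as its uplink.
pmtu, events = discover_path_mtu(1500, [1280, 1500, 1500])
print(pmtu, events)  # prints: 1280 [(1, 1280)]
```

With a path like [1480, 1500, 1280] the loop needs two rounds, which is the "this repeats until the route is traversable" part described above.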

As I said, that’s a bit unfortunate as it means almost every connection attempt is delayed by “Packet Too Big” and the path cache for all external connections sooner or later starts filling up with PMTUs of 1280:

# ip -6 route show table cache

2a02:2e0:3fe:1001:7777:772e:2:85 via fe80::xxxx:xxff:fexx:xxxx dev br0 metric 0

cache expires 594sec mtu 1280

Apart from manually setting the interface MTU on all your clients, this can be fixed via Router Advertisements by announcing the MTU of your Internet uplink or, if unsure, simply the minimum MTU of 1280. With radvd this is done with the AdvLinkMTU option; with dnsmasq, for instance, by setting the MTU of the router’s local interface to the desired size. Upon receiving those RAs, your clients should reconfigure to that MTU immediately. With an MTU of 1280, your clients should no longer need to rely on Path MTU Discovery (at least unless you hit routers that violate standards even further).

Back to the broken service: Why is it a problem that they block ICMPv6 if I can fix that issue locally?

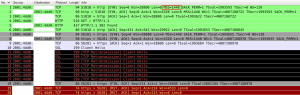

This left me puzzled for a moment until I compared three packet dumps in Wireshark (service with MTU 1500 and broken PMTU discovery, service with MTU 1280, youtube.com with MTU 1500 and working PMTU discovery). You can see the packet dump of a stalled connection attempt with broken PMTU discovery below:

- The initial TCP SYN packet the local client sends to create a new connection contains the Maximum Segment Size (MSS), which equals the MTU of the outbound interface minus the bytes needed for packet headers (40 for IPv6 plus 20 for TCP). This tells the other end how large its TCP segments may be initially. If the client uses a small MTU such as 1280, the MSS is set accordingly, which means the other end sizes its packets appropriately right from the beginning, no PMTU discovery required.

- At some point, a packet sent by either side may be too big, and a router replies to the sender with ICMPv6 “Packet Too Big” and the MTU to be used instead. The end that receives that message adjusts its Path MTU and retransmits the data in packets that fit the new PMTU. Apparently, the server tries to send more than 1280 bytes in reply to my SSL “Client Hello”. That’s too big for my gateway or some other router in between, so the server is sent a “Packet Too Big” message, which it ignores, and thus the connection stalls on both ends as the server can’t get past the router with the lower MTU.
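The relation between MTU and MSS from the first point is simple arithmetic. As a small sketch (the helper name tcp_mss_ipv6 is my own, and I assume a plain 40-byte IPv6 header without extension headers and a 20-byte TCP header without options):

```python
IPV6_HEADER = 40  # fixed IPv6 header size in bytes
TCP_HEADER = 20   # TCP header size in bytes, without options


def tcp_mss_ipv6(mtu):
    """Largest TCP payload that fits into a single IPv6 packet of size mtu."""
    return mtu - IPV6_HEADER - TCP_HEADER


for mtu in (1500, 1280):
    print(mtu, tcp_mss_ipv6(mtu))
# prints:
# 1500 1440
# 1280 1220
```

So a client on a 1280-byte link announces an MSS of 1220, and a well-behaved server never sends it a packet larger than 1280 bytes in the first place.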

This leads to two issues:

- If the other end is blocking ICMPv6 (for related connections), it cannot adjust its Path MTU although routers reply to it with “Packet Too Big” messages. Taken further, this may lead to an accumulation of dead connections on the server side, which is most likely nothing you would want resource-wise. There should be two easy server-side fixes: either allow ICMPv6 for related connections, so PMTU Discovery can work as it should, or always transmit with the minimum MTU of 1280 bytes instead of a higher local MTU, regardless of the TCP MSS. If connections commonly fail with any MTU higher than 1280 bytes, it may be good practice for heavy-load servers to use a fixed MTU of 1280 anyway. (Please note that this assumption may not be correct and was one of the reasons I did not publish this post back in 2014: I just didn’t find time to verify my claims…)

- Unfortunately, it appears that the other end isn’t notified about a change in PMTU on one side, so unless it hits a limitation itself, it does not adjust its own PMTU (which makes some sense, since asymmetric routing is not uncommon and there may be different PMTUs for each direction). In theory, if one end were able to notify the other about a lowered MTU after the TCP SYN, this would still require one end to discover the correct PMTU before the other. In this case it would not have helped, as the client may request the web page with a packet smaller than 1280 bytes (in my case the largest packet sent before the connection stalled had 516 bytes). One workaround that could be implemented on clients is to retry the connection with an MTU of 1280 if it stalls. Note that this may not work for all application protocols in all cases; in particular, no remote action must have been triggered before a connection retry (which would have worked in this case, as the SSL handshake for HTTPS failed, so no action should have been taken by the server yet).
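The stall and the two ways around it can be played through in a small Python simulation. Again, this is just a sketch under simplifying assumptions and not how a real TCP stack is implemented: the function deliver and all its parameters are hypothetical, headers are assumed to be 60 bytes (IPv6 plus TCP, no options), and there is only a single bottleneck link on the way back to the client.

```python
HEADERS = 60  # 40 bytes IPv6 + 20 bytes TCP, no options (assumption)


def deliver(payload, client_mss, server_mtu, bottleneck_mtu,
            icmpv6_allowed, max_tries=5):
    """Return the packet size that gets through, or None if the send stalls."""
    # The server never sends segments larger than the MSS the client announced.
    pmtu = min(server_mtu, client_mss + HEADERS)
    for _ in range(max_tries):
        packet = min(payload + HEADERS, pmtu)
        if packet <= bottleneck_mtu:
            return packet
        # A router drops the packet and sends "Packet Too Big" to the server.
        if icmpv6_allowed:
            pmtu = bottleneck_mtu  # the server learns the smaller Path MTU
        # Otherwise the message is filtered and the server retransmits unchanged.
    return None


# Client on MTU 1500 (MSS 1440), server filters ICMPv6: the reply never arrives.
print(deliver(3000, 1440, 1500, 1280, icmpv6_allowed=False))  # prints: None
# Same setup, but the server accepts "Packet Too Big": works after one retry.
print(deliver(3000, 1440, 1500, 1280, icmpv6_allowed=True))   # prints: 1280
# Client on MTU 1280 (MSS 1220): works even with ICMPv6 filtered.
print(deliver(3000, 1220, 1500, 1280, icmpv6_allowed=False))  # prints: 1280
```

The third case is what happened after I lowered the MTU locally: the smaller MSS in my SYN keeps the server’s packets within 1280 bytes, so the filtered “Packet Too Big” messages never become necessary.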