I bought an HTC Hero in September last year and was quite happy with it. HTC’s custom Sense UI looked far better than the default Android UI. It also shipped with better applications and less Google bundling, and it pioneered some basic multitouch support (only in the browser and the photo app) as well as integration with Flickr, Facebook and Twitter.

Sense’s initial release was very sluggish (see older reviews on YouTube), but that had just been fixed when I ordered my phone. After that, there was one more update in November, with no information on what it fixed. Since it required yet another wipe, was still Android 1.5 (codename Cupcake), and everything had worked fine for me so far, I decided not to install it.

It’s the end of May now: 6 months since that last update, and 8 months since the UI fix and since I got my phone. Android 1.6 (Donut) was released the day after I bought my phone, providing new features such as VPN connections, text-to-speech support, a new market application and support for multiple screen resolutions. I would call the last feature the most important one, since newer devices required it and applications soon started requiring that API, which left older Android versions unable to run them (they are simply hidden from the market). In preparation for the Droid release in November, Android 2.0 (Eclair) was released just one month later. Along with support for newer hardware (camera flash, OpenGL ES 2.0), it added more Bluetooth profiles (up to that point Android phones could only interact with headsets), a better Bluetooth API and Google Voice Search, among others. If I remember correctly, 2.0 was mainly used by the Droid and by no other phone at that time. It was Android 2.1 (also Eclair) that brought these new features to phones from other manufacturers from January on, though not without adding some more features such as live wallpapers. HTC said they would skip 1.6 and go directly to 2.0/2.1 for the Hero. Android 2.1’s release was timed with the launch of the Nexus One, which was manufactured by HTC. Last week, Android 2.2 (Froyo, short for frozen yogurt) was released, bringing (among others) WiFi and USB tethering without the need to root your device.
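To make the "hidden from the market" effect concrete, here is a rough Python sketch of how a minimum API level can filter applications for a device. It is only an illustration under simplifying assumptions: the app names and the functions `visible_in_market` and `visible_apps` are made up for this example, and the real market applies more filters than this single check.

```python
# API levels of the Android releases mentioned in this post.
API_LEVELS = {
    "1.5": 3,  # Cupcake
    "1.6": 4,  # Donut
    "2.0": 5,  # Eclair
    "2.1": 7,  # Eclair
    "2.2": 8,  # Froyo
}

# Hypothetical apps and the minimum API level each one declares.
APPS = {
    "legacy_app": 3,            # still targets 1.5
    "multi_resolution_app": 4,  # needs the screen support added in 1.6
    "live_wallpaper_app": 7,    # needs live wallpapers from 2.1
}


def visible_in_market(device_version, app_min_api_level):
    """Simplified rule: an app is shown only if the device's API level
    is at least the minimum level the app declares."""
    return API_LEVELS[device_version] >= app_min_api_level


def visible_apps(device_version):
    """Return the sorted names of the hypothetical apps a device would see."""
    return sorted(
        name
        for name, min_api in APPS.items()
        if visible_in_market(device_version, min_api)
    )


if __name__ == "__main__":
    for version in ("1.5", "1.6", "2.1"):
        print(version, visible_apps(version))
```

Under these assumptions a Hero stuck on 1.5 sees only the app that still targets API level 3, while a 2.1 device sees all three.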

Sprint released a 2.1 update for their customized Hero last week, one week after another customized variant called “Droid Eris” got the update. Owners of the plain GSM Hero (in contrast to the CDMA models sold by US operators) are still waiting in vain for a release. There were multiple release dates, both rumored and official, each cancelled a few days beforehand or simply missed. According to phandroid.com, HTC now has yet another release date for us: a first preliminary update should roll out sometime in June, and the final 2.1 update should follow “a couple of weeks later”, which could easily mean July or August judging by their previously announced dates. HTC also said that 2.2 would come to all phones released in 2010, which excludes the Hero for now…

Admittedly, Google released Android 1.6, 2.0 and 2.1 much too fast for any manufacturer to keep up with; that’s true. Also, HTC’s custom UI, Sense, has to be ported to each Android revision they release an update for. But HTC has already released a phone running Sense on 1.6. So assuming they stopped supporting Sense on 1.5 in November at the latest, they have now had 6 months to work on ports to newer Android versions and prepare an update for the Hero. And they did: not only did the Droid Eris and the Sprint Hero get an update in the past weeks, HTC also released a lot of other phones before that and is developing even more. All have similar hardware, and starting with the Hero all of their Android phones have the Sense UI.

So why hasn’t HTC released a 2.1 update for the Hero yet? Considering that even the Sprint Hero may have gotten its update so “soon” only because the network operator Sprint built its own release, I get the impression that HTC is building throw-away phones: if one gets outdated, just dispose of it and buy a new one; maintenance runs for only a few months and then suddenly stops. This may have worked for conventional phones in the past, but since smartphones run a common operating system that is often maintained by another company, it’s completely the wrong way to keep the Android platform healthy and customers as well as developers satisfied. By healthy I mean that a device should usually support all public API levels until the hardware becomes insufficient, which shouldn’t be much of a problem with smartphones, which are nothing but PDAs with a cellular radio chip. However, since Android is open source and modifications to the Linux kernel have to be published under the GPL, Android phones should be open to custom upgrades, and so is the HTC Hero.

Unfortunately I’m legally unable to link to or name any of the custom ROMs I’ve found, but a standard Google search will most likely turn them up quite easily. They either run the plain Android system or incorporate (pirated) copies of Sense UI from leaked or previously released images. Since I’m not the only one who is sick of HTC’s poor excuses, there seems to be a variety of ROMs specifically targeting the Hero. Reading up on what’s necessary to get an update, I found out that HTC is actually making it difficult to flash an unofficial ROM onto the phone. Flashing involves unlocking a special diagnostic mode and dealing with signed files; things I would not have expected at all from a badly maintained phone based on open source! Although I didn’t try it myself, it seems that even versions containing ripped Sense binaries run surprisingly well (with some minor bugs and inconveniences) on the Hero, so the question remains: why does HTC delay updates if a community of a few unrelated developers is able to build almost completely working releases?

To make things worse, it seems that at least a few of the released 2.1 images introduced a jail lock that appears not to have been completely broken yet. So you are strongly advised to check the news on this topic before installing any official HTC updates from now on, or you may never be able to go beyond Android 2.1.

Why does this happen? What are they thinking? I’m starting to regret having bought my Hero with the mistaken expectation of getting a modifiable phone that, thanks to an evolving open source operating system, wouldn’t become outdated for a few years. All I can do now is warn other people against buying HTC’s phones without first investigating possible issues. Other Android phones may also lag several months behind Google’s SDK releases, and that may be excusable up to a point (especially since Google has sprinted ahead with its releases since 1.6). But by the day the Hero finally receives its 2.1 update, other phones will already be getting their updates to 2.2, and I doubt HTC will continue to update the Hero further. Since a jail lock may be introduced to the normal Hero by the preliminary update in June, I would strongly advise either getting a custom ROM instead or living with 1.5 and waiting for more information (is HTC serious about 2.1, and will the Hero get 2.2?). Once you have installed that update, you may not be able to go further without buying a new phone, although the hardware would be capable of it. All I know for sure is that I won’t buy another HTC phone in the near future.

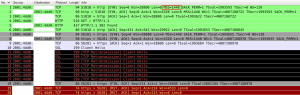

As of May 17, Android’s installed base is split almost equally across versions 1.5, 1.6 and 2.1. With the advent of 2.2 and the final updates to 2.1, I would expect that we are one or two months from finally calling 1.5 (the third API level) “legacy”. This means that the number of devices running 1.5 will drop significantly, and therefore fewer and fewer applications will run on 1.5 because more current applications may prefer to use newer APIs (with Android 2.2 we have reached API level 8). The 34.1% share of Android 1.5 may in fact contain a large percentage of Heroes, since almost every other phone has had updates and the Hero sold pretty well. Developers have, or will have, to choose whether they want to maintain a legacy “Hero revision” of their software or not. Thus by holding back OS updates, HTC is not only upsetting its customers but annoying developers as well, and it’s highly unlikely they will support a share of 1.5 users of 10% or less. Running out of updates in less than one year isn’t what Android was meant to be, nor what I would expect from a 400€ device.